Big thanks to Matt Biggar, Ph.D. and Nicole Ardoin, Ph.D., a Pisces Foundation grantee, for the tremendous collaboration in writing this article on collective impact recently published in the Stanford Social Innovation Review. At the Pisces Foundation, we believe in harnessing the power of collaboration so that more schools and communities can tap into the many benefits of environmental education. When it comes to getting the environmental know-how our kids need to become well-prepared stewards of their rapidly changing world, environmental education delivers—whether in a classroom, canoe, or anywhere in between. In order to make environmental education part of every child’s experience, not just some, collaboration is key.

(This article first appeared in the Stanford Social Innovation Review on May 16, 2017: https://ssir.org/articles/entry/collective_impact_on_the_ground)

Collective Impact on the Ground

An in-depth look at an environmental education collaborative during the early stages of its collective impact process.

By Matt Biggar, Nicole M. Ardoin, & Jason Morris | May 16, 2017

In early 2013, funders, environmental educators, and researchers crowded elbow-to-elbow around a 20-year-old redwood forest shelf fungus. In the 23rd-floor conference room of a San Francisco skyscraper, a skilled educator engaged the group in conversation around this object. Hushed tones filled the room, punctuated by the easy laughter and engaged questions one would expect from a collegial group.

Yet, the group hadn’t always looked this way. Just a year and a half earlier, members of the group sat stiffly in office chairs as they wrestled with an exciting, yet daunting, question: What might be possible if their organizations worked together to increase the impact of environmental education across the San Francisco and Monterey Bay regions?

Two years after beginning their collaborative work, the group—now called ChangeScale—had developed a vision statement, business plan, and set of activities aligned to measurable goals. Supported by a backbone organization, ChangeScale’s core organizational partners committed to bi-monthly, daylong meetings, which built strong relationships and trust.

Since the collaborative’s inception, ChangeScale had used the five conditions of the collective impact approach to guide its work: a common agenda, shared measurement systems, mutually reinforcing activities, continuous communication, and a backbone support organization. ChangeScale pursued this work with an underlying commitment to equity—a key collective impact principle. (See “A Brief History of ChangeScale” at the end of the article.)

Since the collective impact framework was introduced in a 2011 Stanford Social Innovation Review article by John Kania and Mark Kramer, hundreds of collaboratives around the world have used it to structure approaches to persistent social issues.1 Because the framework is relatively new, however, only a thin basis of empirical research exists.2

Concern about the rapid proliferation of this approach has prompted a call to strengthen the research core, reflect critically on the situations in which the approach may be more or less effective, and identify potential stumbling blocks.3 Researchers from Columbia University’s Teachers College, for example, note that, “Knowledge of collective impact—what it entails, what obstacles it faces, and how to overcome them—is limited,” and that “while optimistic accounts of collective impact reforms are plentiful, insightful details about how organizations carry out the ventures, how they take root, and what impact they have remain rare.”

To address this knowledge gap, we examined the unfolding of a collective impact process during the first three years of ChangeScale’s development.4

ChangeScale’s leadership set out to specifically address the collective impact conditions in designing the initiative. Indeed, our study found evidence of those conditions when examining ChangeScale’s initial phase: The nine collaborative partners spent the first two years developing a common agenda, which comprised a strategic plan and vision statement with six crosscutting goals.

In ChangeScale’s third year, the partners initiated mutually reinforcing activities designed through working groups and outlined in the collaborative’s planning documents. Frequent, open, and transparent communication characterized the condition of continuous communication. Supporting the communication, planning, and coordination needs of the collaborative, backbone support evolved over the first three years into a full-time director position, as well as additional support staff. By late 2014, specific, shared measurements had not yet been established. As partners recognized those measures as the critical next step, in December 2014, ChangeScale established a working group on measurement and evaluation.

Our findings suggest that the five collective impact conditions were instrumental to ChangeScale’s success. At the same time, our research uncovered differing perspectives on the direction and progress of those conditions. Such differences suggest potential limitations of formal, long-term collaboration guided by the collective impact approach, raising questions about strict adherence to the conditions.

In this article, we discuss dilemmas that arose during the collective impact process, with a focus on the problem-defining and early implementation stages. Building on themes from Columbia University’s review of the history and theory of cross-sector collaboration in education, we make several suggestions of how to address those dilemmas in a collaborative process.

Defining the Problem

Clearly defining a problem and developing a shared understanding of, and focus on, that problem can be difficult for collaboratives, even those with high levels of trust and collegiality. The Columbia University researchers caution against assuming that good attendance and camaraderie alone indicate shared understanding of and agreement on the problem or issues among organizations in a collective impact initiative. Our research provides examples of how different members discussed and viewed the problems to be addressed, almost three years into a collective impact initiative.

ChangeScale members described the primary problem using a range of terms, suggesting potentially divergent perspectives. Some suggested that the most urgent problem was limited to within-sector collaboration; others emphasized the need to reach out to partners beyond the environmental education sector. Some members indicated that the greatest need was to build research-based practices or strengthen research-and-practice connections. Others raised concerns related to the need for more consistent messaging and advocacy for the environmental education field, with a goal of enhancing political will to support environmental education-related causes.

The need to enhance equitable access to environmental education programs was also seen as a key issue for the collaborative to address. Some members articulated overarching issues, such as a need to enhance environmental literacy among the populace or to address environmentally related problems—such as climate change or drought—requiring individual and collective action.

Without clearly determining the problem to address, a collaborative can have difficulty developing a plan of action. Without reaching specificity on the problem at hand, a collaborative can be challenged to agree on common shared-measurement indicators. With ChangeScale, varying understandings of the problem contributed to developing a multifaceted agenda, which proved difficult to implement and measure initially.

In this situation, classifying problems as either “technical” or “adaptive” may help a collaborative function more effectively and indicate how appropriate the collective impact approach is. Social problems can be technical: well-defined, with known answers that a few organizations can implement. Alternatively, problems may be adaptive: complex, with unknown answers, requiring many organizations to bring about change.

Similar to adaptive problems, wicked problems5 are large in scope and scale, lack clarity on the problem’s dimensions, and have a range of possible solutions. The collective impact approach is particularly appropriate for adaptive or wicked problems as it addresses issues through an iterative, flexible, crowd-sourced lens, leveraging the strengths of multiple organizations.

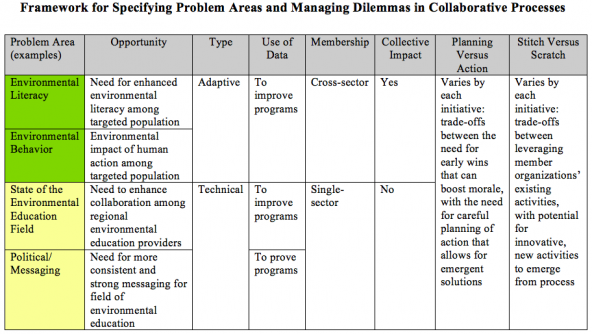

In our framework, we identify issues that ChangeScale members commonly discussed in meetings and that, subsequently, formed the collaborative’s common agenda. (See “Framework for Specifying Problem Areas and Managing Dilemmas in Collaborative Processes” at the end of the article.) We assess whether each of those issues might be considered technical or adaptive and, by this same measure, whether each may be appropriately addressed by a collective impact approach.

Many of the issues described, such as the need for enhanced collaboration among environmental education providers, might be considered more technical and, therefore, may not be as appropriate for a collective impact approach. Those issues related to enhancing environmental literacy or mitigating the negative impact of human action on the environment may be considered more adaptive; as such, they may be ripe for a collective impact approach.

Defining and agreeing to a specific problem early in the process, and being clear about the type of problem being addressed, is critical to providing direction for a collaborative. Agreement on a singular, primary problem—rather than a group of related problems—may help collaboratives decide on the best overall approach to make targeted progress. A common understanding of the problem and how to approach solving it is included in the definition of a common agenda for collective impact. Our research emphasizes the importance of focusing on the problem-defining stage of a collaborative process.

Four Potential Dilemmas

Our research identified four pivotal dilemmas emerging from the early stages of the collaborative process. We characterize these as: inviting single or cross-sector membership in the collaborative; using data to prove versus improve; deciding when to pursue planning versus action stages; and pursuing collaborative activities through combining existing activities versus taking up new activities.

1. Single Versus Cross-Sector Membership

ChangeScale formed as a single-sector collaborative, drawing initial membership from nonprofit environmental and education-focused institutions. Because the initial purpose of the collaborative was to build the environmental education field’s efficacy at the regional scale, the core founding members felt the need to establish an initial collaboration among environmental education providers before involving other sectors, such as government or business.

Although the members of the collaborative in the initial phase were all from a single sector (nonprofit), ChangeScale partners were diverse in other ways: size of budget, number of staff and visitors, and physical footprint; scope of mission, programs, and geographic scale; and proportion of overall activities focused on environmental education.

The collective impact approach emphasizes that collaboration across sectors is critical to address adaptive problems; in this way, collaboratives can generate creative solutions. ChangeScale’s core members did consider including members from other sectors at the outset. In multiple meetings, members discussed potential partners such as school districts, government agencies, utility companies, health care providers, and juvenile justice programs. ChangeScale partners indeed understood the importance of the cross-sectorial tenet, yet they decided not to pursue this approach initially because it seemed important to first develop internal-sector collaboration and articulate shared priorities.

At the end of its third year, ChangeScale moved toward creating cross-sector partnerships between school districts and environmental education providers. This cross-sector move was made in order to increase equitable access to environmental education, a more adaptive problem.

Benefits and drawbacks exist with initially taking a single-sector approach. One of the benefits is that the single-sector approach enables close relationships to develop between organizations within the core sector before others are included. However, bringing in organizations from other sectors at later stages may increase the difficulty of meaningfully incorporating other sectors’ needs once the initial groundwork has been laid. This two-stage process may also create a tiered membership: by virtue of their sector, some organizations may feel more invested in the collaborative than others.

2. Data to Prove Versus Improve

A second dilemma that our research surfaced is the tension between data designed to prove the value of an activity or process (such as to document, explain, and justify the value of environmental education) and data designed to improve an activity or process (such as to iteratively adapt and enhance the quality of an environmental education program or improve access, overall, to environmental education).

Some ChangeScale members described needing data to prove the value of their programs through, for example, supporting consistent, impactful messaging about outcomes of environmental education. In this case, the data collected might demonstrate how environmental education enhances environmental stewardship or academic outcomes, such as grades, focus on school-related tasks, and cooperation with peers.

At other times, members discussed needing data that contributed to programmatic and sector improvement. In those cases, measurement might involve tracking participant numbers and demographics or program characteristics. Measurement might also include assessing changes in participants’ environmental knowledge, attitudes, skills, and actions. Data generated from such measures may be used to identify gaps or areas for improvement.

ChangeScale members described the value of common tools and systems to coalesce data across institutions and programs, yet they found it difficult to work toward a shared measurement system without being explicit about their data-use, collection, and dissemination approaches.

Deciding how to use data may also help a collaborative in the foundational phase determine what type of problems it is trying to address and whether a collective impact approach—or some other problem-solving approach—may be most useful. Using data to prove the value of programs may better align with addressing technical problems, such as the need for more impactful messaging or advocacy for the programs. The use of data for improvement and learning is perhaps more consistent with addressing an adaptive problem.

Being explicit, initially, in deciding on a data-use approach may create more clarity within a collaborative and help it move toward shared measurement and common indicators. This may also be an important consideration in deciding whether the collective impact approach fits with the collaborative’s direction.

3. Planning Versus Action

A third dilemma is the tension between those who want to take time to plan and those who want to move more quickly to action. This is similar to the tension that Melody Barnes and Paul Schmitz describe between urgency and patience. During ChangeScale’s first three years, the members remained committed to a thoughtful planning process that required patience among members seeking action. ChangeScale’s approach was process-oriented and focused on building relationships, openly sharing opinions, working through issues, and crafting a vision statement.

By the end of ChangeScale’s second year, the founding group had met 14 times, and some partners expressed frustration with a perceived hesitancy to pursue bold, decisive action. This tension between the desire to get the planning right and the desire to get to action, not uncommon in collaborative endeavors, began to negatively impact some ChangeScale partners’ drive and enthusiasm for the initiative.

ChangeScale’s experiences—along with those of other education-focused collective impact initiatives6—make evident the time-consuming nature of collaborative-planning processes. Building trust, understanding, and a vision requires deep commitment over a sustained period, especially when individuals and/or organizations without prior professional or personal relationships within the collaborative structure undertake such a process.

Our study documents how difficult it can be for diverse partners, even those within the same sector, to agree on common, coordinated action. Patience and a long-term perspective are essential to a successful collective impact process. These elements, which emphasize emergent strategies over initial action, allow time to lay the groundwork that is critical for sustained effectiveness.

Building a forward-looking narrative is a hallmark of a successful collective impact endeavor.8 Because frustration can build when action occurs slowly, early wins are necessary to demonstrate the value of collaboration and maintain momentum.7 ChangeScale did not implement on-the-ground actions until the middle of the third year, which may explain some of the members’ declining morale.

4. Existing Activities Versus New Activities

A fourth dilemma involves the tension between planning new, innovative activities through a collaborative versus building on member organizations’ existing activities.

During the three years of ChangeScale’s first phase, members debated whether to engage in new actions or combine existing actions. Many ChangeScale members, funders, and other regional partners expected the collaborative process to generate new solutions. Yet, the potential for leveraging ongoing, differentiated activities within the member organizations existed, thanks to the strong presence of the members in the region. In the collaborative’s quest for model school district partnerships, for example, member organizations shared their experiences with prior cross-sector projects that involved environmental education providers and schools.

The collective impact approach stresses mutually reinforcing activities based on partner organizations’ current activities. The idea of creatively generating emergent ideas for action, however, often appeals to funders and partners seeking innovative approaches. Some of the hesitancy in getting to action may stem from an emphasis on additive work. ChangeScale members expressed concern about activities requiring buy-in from their organizations, specifically related to whether those might demand additional resources from already-strained staff and budgets.

Although new activities present a number of challenges, the question remains as to whether building on existing activities through enhanced coordination can, in itself, lead to different results or whether entirely new activities are needed to make the desired, often-dramatic, changes.

Moving to Coordinated, Focused Action

Coordinated action can be difficult to achieve, even in a climate of trust and with strong working relationships. After the initial collaborative phases, ChangeScale began to move slowly, but deliberately, toward action. At this point, the group emphasized refocusing and redefining its collective work, as well as determining whether and how to employ the collective impact framework.

For ChangeScale, developing a business plan, along with leveraging findings from the research study described in this article, helped codify the collaborative’s focus. The plan addresses the technical problem of enhancing collaboration among regional environmental education organizations through hosting regular convenings and workshops. The plan also addresses the adaptive problem of growing environmental literacy among the region’s populace through developing a regional school-partnerships initiative with the goal of reaching 150,000 students over the next five years. This initiative involves bringing together organizations to collectively work with a targeted, common population, increasing the potential for a cross-sector, collective impact approach.

In consideration of these different levels of work and problems being addressed, the role of ChangeScale is morphing into that of a network hub, offering technical assistance and support to a range of regional environmental education providers. ChangeScale is also developing and supporting local school partnerships that want to apply collective impact principles.

Framework for Collaborative Processes

By sharing some of the challenges inherent in the collective impact process, we hope other organizations and partners participating in similar collaboratives might confront these dilemmas more directly and explicitly. In doing so, we hope they might make decisions early in the process that enhance their collaboratives’ effectiveness. To facilitate this process, we developed a collaborative decision-making framework that specifies these conditions and areas of tension.

The framework we developed suggests that focusing on a specific problem may impact many dimensions of collaborative work. If a collaborative (or subgroup within a collaborative) focuses on a problem that is either adaptive or technical, the collaborative can then better determine the appropriateness of a collective impact approach. The framework may also help members of the collaborative decide whether it is more appropriate to have single- or cross-sector membership from the outset, find a balance between planning and action, and focus on new or existing activities early in the process. Those considerations contribute to developing an approach that will help sustain momentum in later stages of collaborative work. The core partners can revisit how to approach the dilemmas as needs and context change over time.

We do, however, caution against strictly adhering to this framework. Within each dilemma or decision, the answer may not simply be one choice or another; rather, the utility may come from deliberating on the trade-offs inherent within each dilemma and being explicit about making choices to guide the collaborative’s work.

Expanding Knowledge on Collective Impact

Our research indicates that the collective impact approach needs to provide clearer direction on how, and to what level of specificity, collaboratives define the problem to be addressed. Clarifying the essential problem is challenging, especially with multiple organizations; differing, yet interrelated, problems; and the various levels at which problems manifest. Determining the primary problem is critical, however, for sharpening the planning effort and, eventually, taking action toward achieving shared goals.

Collaborative processes require many critical decisions. Those decisions are made at various stages in the process around matters such as membership, planning time, and activity development—all considerations that influence the problem-solving approach. The collective impact process provides guidance around aspects such as its emphasis on cross-sector membership and building on existing activities.

The kinds of dilemmas and related decisions that unfold in a multi-year collaborative such as ChangeScale, however, suggest that simple answers to such considerations may ignore the difficult reality of actual collaborative work. Research on collective impact should identify “dangerous tripwires” and how they might be avoided.9

We have identified several such tripwires, primarily in the problem-defining stage, and described dilemmas encountered in the process. Further research and elaboration on this stage, as well as suggestions related to managing the dilemmas of collaborative work, will help improve the short- and long-term effectiveness of collective impact initiatives.

A Brief History of ChangeScale

Founded in 2011 as the Environmental Education Collaborative, ChangeScale formally launched with an initial core membership that included two representatives from each of nine partner organizations working in the environmental education field in the San Francisco and Monterey Bay region. This group met 14 times between November 2011 and December 2014.

Over the course of those meetings, the core group developed a theory of change, vision statement, and initial strategic plan. In 2012, ChangeScale hired a full-time director to provide backbone support for the initiative. In 2014, the group expanded its staff and began to implement activities, including region-wide professional development workshops, now conducted on a quarterly basis.

By early 2015, ChangeScale had developed an actionable business plan accompanied by a revised, expanded governance plan and membership structure. With ChangeScale’s support, communities of practice that coalesce local teams of environmental education providers and cross-sector school district partnerships took root that year as well. Over the years, the planning-and-implementation phases have involved local practitioners, researchers, and funders through working groups, community listening sessions, community events, and professional development opportunities.

Notes